Multilingual resource creation

Cross-lingual resource induction

Manually creating high-quality language resources (such as treebanks and lexicons) and NLP processing tools (such as taggers and parsers) is tedious and costly, and thus prohibitive to undertake for many languages. Annotation projection exploits word-aligned parallel corpora to induce target-language annotations from automatically assigned source-language annotations. We have applied this technique to induce a temporal labeler for German by projecting TimeML markup from English annotations created with the TARSQI TimeML labeling toolkit. We further induced an f-structure bank for Polish by projecting English LFG f-structure information onto a word-aligned Polish section of the JRC-Acquis corpus. Find out more here.
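The core projection step can be illustrated with a minimal sketch. It assumes, for simplicity, per-token labels (TimeML actually annotates spans with attributes) and a word alignment given as (source index, target index) pairs; the function name `project_labels` and the tie-breaking rule are our own illustrative choices, not the toolkit used in the projects above.

```python
from typing import Dict, List, Tuple

# Word alignment as (source_index, target_index) pairs (assumed format).
Alignment = List[Tuple[int, int]]


def project_labels(source_labels: List[str], target_len: int,
                   alignment: Alignment, default: str = "O") -> List[str]:
    """Copy each source token's label onto every target token aligned to it.

    Unaligned target tokens get `default`. If several source tokens align to
    the same target token, the first non-default label (in source order) wins.
    """
    projected: Dict[int, str] = {}
    for src_idx, tgt_idx in sorted(alignment):
        # Only fill a target slot that is still empty or holds the default label.
        if projected.get(tgt_idx, default) == default:
            projected[tgt_idx] = source_labels[src_idx]
    return [projected.get(i, default) for i in range(target_len)]


# English: "He arrived on Monday"  ->  German: "Er kam am Montag an"
src_labels = ["O", "EVENT", "O", "TIMEX3"]
alignment = [(0, 0), (1, 1), (1, 4), (2, 2), (3, 3)]  # "arrived" ~ "kam ... an"
print(project_labels(src_labels, 5, alignment))
# ['O', 'EVENT', 'O', 'TIMEX3', 'EVENT']
```

The German separable verb "kam ... an" shows why a one-to-many alignment is useful: both parts of the verb inherit the EVENT label from "arrived". In practice, noisy automatic alignments are the main source of projection errors, which is why projected data usually needs filtering before training a target-language labeler.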

Building a large multilingual Named Entity Resource from Wikipedia

In recent years, research in NLP and IR has shown a growing interest in extracting information about entities such as people (cf. state-of-the-art research on recognizing people across Web pages, known as the Web People Search Task). As part of our ongoing effort to develop machine-readable resources for a multitude of languages, we developed a multilingual database of Named Entities from Wikipedia, called HeiNER, which includes critical information for named entity disambiguation, such as multilingual transliterations and their contexts of occurrence. Find out more here.
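To make the idea of such a resource concrete, here is a minimal sketch of one possible data structure. It assumes hypothetical per-language page records that have already been linked to a shared English "pivot" title (for example via Wikipedia's interlanguage links); the record format, `EntityEntry`, `build_resource` and `entities_named` are illustrative names and do not reflect HeiNER's actual schema.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class EntityEntry:
    """One named entity: its name in each language and sample contexts."""
    key: str                                                        # pivot (English) title
    names: Dict[str, str] = field(default_factory=dict)            # lang -> name
    contexts: Dict[str, List[str]] = field(default_factory=dict)   # lang -> sentences


def build_resource(records: Iterable[dict]) -> Dict[str, EntityEntry]:
    """Group per-language page records under a shared pivot title.

    Each record is a hypothetical dict with keys 'lang', 'title',
    'pivot' (the English title reached via interlanguage links) and 'contexts'.
    """
    resource: Dict[str, EntityEntry] = {}
    for rec in records:
        entry = resource.setdefault(rec["pivot"], EntityEntry(key=rec["pivot"]))
        entry.names[rec["lang"]] = rec["title"]
        entry.contexts.setdefault(rec["lang"], []).extend(rec["contexts"])
    return resource


def entities_named(resource: Dict[str, EntityEntry], name: str) -> List[str]:
    """Return all pivot keys whose name in any language equals `name`."""
    return sorted(key for key, e in resource.items() if name in e.names.values())


records = [
    {"lang": "en", "title": "Moscow", "pivot": "Moscow",
     "contexts": ["Moscow is the capital of Russia."]},
    {"lang": "de", "title": "Moskau", "pivot": "Moscow",
     "contexts": ["Moskau ist die Hauptstadt Russlands."]},
    {"lang": "ru", "title": "Москва", "pivot": "Moscow", "contexts": []},
]
resource = build_resource(records)
print(resource["Moscow"].names)            # {'en': 'Moscow', 'de': 'Moskau', 'ru': 'Москва'}
print(entities_named(resource, "Moskau"))  # ['Moscow']
```

A lookup such as `entities_named` returns every candidate entity for a surface name; a disambiguation component would then rank these candidates, for instance by comparing the stored contexts with the context of a new mention.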

Lexical semantic acquisition and processing

Learning adjective semantics for ontology induction and search

One important function of adjectives used as noun modifiers is to express properties of nouns or to establish relations between nouns. Adjectives can therefore provide valuable information both for ontology learning and for natural-language-based search. In ontology learning, the goal is to induce concepts whose instances share the same or at least similar properties, as well as the most prominent semantic relations between these concepts. In our work, we develop machine-learning methods that automatically classify adjectives according to their inherent semantics in order to improve ontology learning.

Knowledge extraction from Wikipedia

The availability of large repositories of collaboratively generated, semi-structured knowledge such as Wikipedia has brought the automatic acquisition of wide-coverage knowledge resources back into the spotlight. Building on previous seminal work on extracting machine-readable knowledge from Wikipedia, we are currently integrating and improving this knowledge by leveraging other computational knowledge sources, such as the semantic lexicon provided by WordNet. The objective is to develop a knowledge repository in which various kinds of knowledge (e.g. encyclopedic and lexical-semantic) are harmoniously integrated to provide a multi-faceted, broad-coverage semantic back-end for a variety of knowledge-lean NLP systems.
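One simple way to link a Wikipedia page to a WordNet sense is a Lesk-style gloss overlap: choose the sense whose definition shares the most content words with the page text. The sketch below shows only this heuristic; it is not the method used in our work, and the sense IDs, glosses and tiny stopword list are invented for illustration rather than taken from WordNet.

```python
import re
from typing import Dict, Optional, Set

# A tiny stopword list; an assumption for illustration only.
STOPWORDS = {"a", "an", "the", "of", "to", "is", "and", "in", "for", "with"}


def content_words(text: str) -> Set[str]:
    """Lower-cased alphabetic tokens minus stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def best_sense(page_text: str, sense_glosses: Dict[str, str]) -> Optional[str]:
    """Pick the sense whose gloss shares the most content words with the page.

    Returns None when no gloss overlaps with the page at all.
    """
    page = content_words(page_text)
    best, best_score = None, 0
    for sense_id, gloss in sense_glosses.items():
        score = len(page & content_words(gloss))
        if score > best_score:
            best, best_score = sense_id, score
    return best


# Hypothetical sense IDs and glosses, not actual WordNet output.
glosses = {
    "jaguar#1": "a large spotted feline of tropical America similar to the leopard",
    "jaguar#2": "a British brand of luxury cars",
}
page = "The jaguar is a large cat native to the Americas, a spotted feline."
print(best_sense(page, glosses))  # jaguar#1
```

Bag-of-words overlap is brittle (here "Americas" and "America" do not match), so realistic mappings typically add stemming, use richer page context such as categories and redirects, and exploit the WordNet graph rather than glosses alone.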

Fine-grained Named Entity Recognition and Classification

Named entities such as Picasso, Nefertiti or Paris can be sub-classified into very fine-grained semantic entity classes, akin to word senses. We have developed a data-driven approach, based on shallow processing, for automatically acquiring high-quality labeled data sets consisting of names, their corresponding entity classes, and their contexts of occurrence. These data are used to train a Maximum Entropy classifier that sub-classifies named entities with high precision. Our initial experiments suggest that fine-grained named entity recognition and classification is harder than word sense disambiguation, but that it can reach higher performance levels, provided that high-quality training data can be acquired. Learn more about this in our ACL-NEWS paper (Ekbal et al. 2010) and an invited talk presented at Konvens 2010.
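For readers unfamiliar with Maximum Entropy classification, the following minimal sketch shows the model (multinomial logistic regression over binary features) trained with plain stochastic gradient ascent and a simple L2 penalty on the weights touched by each update. The context features, class labels and hyperparameters are hypothetical, and the optimizer is a swapped-in choice for brevity; this is not the feature set or training setup of Ekbal et al. (2010).

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# One training example: (active binary feature names, entity class label).
Example = Tuple[Sequence[str], str]


class MaxEnt:
    """Multinomial logistic regression (maximum entropy) over binary features."""

    def __init__(self, labels: Sequence[str]) -> None:
        self.labels = list(labels)
        # weights[(feature, label)]; unseen pairs default to 0.0
        self.weights: Dict[Tuple[str, str], float] = defaultdict(float)

    def probs(self, features: Sequence[str]) -> Dict[str, float]:
        """P(label | features) via a softmax over summed feature weights."""
        scores = {y: sum(self.weights[(f, y)] for f in features) for y in self.labels}
        top = max(scores.values())  # subtract the max score for numerical stability
        exps = {y: math.exp(s - top) for y, s in scores.items()}
        z = sum(exps.values())
        return {y: e / z for y, e in exps.items()}

    def train(self, data: List[Example], epochs: int = 50,
              lr: float = 0.5, l2: float = 0.01) -> None:
        """Stochastic gradient ascent on the conditional log-likelihood."""
        for _ in range(epochs):
            for features, gold in data:
                p = self.probs(features)
                for y in self.labels:
                    # Gradient per active feature: observed minus expected count.
                    grad = (1.0 if y == gold else 0.0) - p[y]
                    for f in features:
                        w = self.weights[(f, y)]
                        self.weights[(f, y)] = w + lr * (grad - l2 * w)

    def predict(self, features: Sequence[str]) -> str:
        p = self.probs(features)
        return max(p, key=p.get)


# Hypothetical context-word features for three fine-grained classes.
data = [
    (["ctx=painted", "ctx=canvas"], "painter"),
    (["ctx=exhibition", "ctx=painted"], "painter"),
    (["ctx=capital", "ctx=river"], "city"),
    (["ctx=mayor", "ctx=capital"], "city"),
    (["ctx=dynasty", "ctx=queen"], "ruler"),
    (["ctx=pharaoh", "ctx=queen"], "ruler"),
]
model = MaxEnt(["painter", "city", "ruler"])
model.train(data)
print(model.predict(["ctx=painted", "ctx=exhibition"]))  # painter
```

The weights are indexed by (feature, class) pairs, so adding a new fine-grained class only adds new weights; this makes the model convenient when the class inventory grows with the automatically acquired training data.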

Recognizing and processing generic expressions

Linguistic theory distinguishes two kinds of generic expressions:

Generic noun phrases are phrases that refer to a kind instead of a specific entity. In the sentence "Birds fly.", the noun "birds" refers to the species of birds, not just to a particular group of birds. When using generic noun phrases, we describe properties of kinds that are not necessarily found in every individual instance of the kind. Moreover, predications over kinds can be considered true even though they do not hold for every instance of the kind. Thus "Birds fly." is considered true although penguins, which are birds, do not fly.

Generic sentences are used to make assertions about rule-like or habitual facts or events. As with generic noun phrases, such assertions allow exceptions. The sentence "Mary smokes after dinner." does not entail that Mary smokes after every dinner; rather, it states that smoking after dinner is a habit of Mary's.

We are currently investigating how to model these formal semantic distinctions with data-driven statistical methods in order to automatically identify generic expressions in texts. The acquired uncertain knowledge can be used to construct and populate ontologies in a data-driven way.

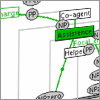

Discourse processing: co-reference resolution and discourse-level event processing

Recent advances in Natural Language Processing have made it possible to tackle complex tasks that process textual input at the more global, and more complex, level of entire documents. Discourse-level semantic analysis is crucial for advanced information access as well as for automatic natural language understanding and text generation technology. Among the various discourse-level phenomena, we focus our research on multilingual co-reference resolution and discourse-level event processing. In the area of co-reference resolution, we take previous efforts one step further by extending the machine-learning-based co-reference toolkit BART to languages other than English. Our SFB 619 project on ritual structure and variation investigates the extraction and analysis of event sequences, whereas the SiGHTSee project deals with the presentation of events in instructional way-finding tasks. Find out more here.

Ontologies and NLP

We have developed techniques for ontology-based information extraction in the SmartWeb project (see Buitelaar et al. 2008), as well as ontology population for specialized domains (see Reiter et al. 2008). Methods for the automatic enrichment of ontologies with linguistic information were explored in Reiter and Buitelaar (2008, 2009).

In previous work, we investigated techniques for multilingual question answering from structured knowledge bases, building on deep syntactic and semantic analysis, ontology-based domain modeling, and inferencing techniques (cf. Frank et al. 2005, 2007).

Ontology-based modeling was successfully used in previous work for encoding an integrated corpus and lexicon model in OWL for frame-semantic annotations in the SALSA project (cf. Burchardt et al. 2008a,b).

NLP applications

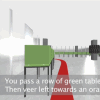

Natural Language Generation for way-finding instructions

Many people worldwide are familiar with mobile navigation systems and the simple instructions they produce. However, the limited vocabulary of such systems often leads to confusing or misleading instructions. To address these problems, we analyze directions given by human instructors and apply their linguistic strategies to generating natural language directions. The results of this research can help make mobile navigation systems more natural to use and easier to understand.

GIVE challenge

For the second installment of the GIVE challenge, we developed an NLG architecture that generates instructions to guide users through a virtual 3D environment. The aim of this challenge is to evaluate and improve natural language generation systems on a large scale using feedback from online participants.

Interaction of language and vision

Advancing automatic semantic processing to performance levels corresponding to human language capacity necessitates exploration into related disciplines, such as cognition, artificial intelligence, or visual processing. We are engaging in interdisciplinary research that aims at deepening our understanding and refining computational modeling of semantic processing. One of these areas is the study and modeling of the interaction of language and vision. We are investigating the interaction of language and vision in instructional contexts in the SiGHTSee project.

NLP for cultural heritage and social sciences

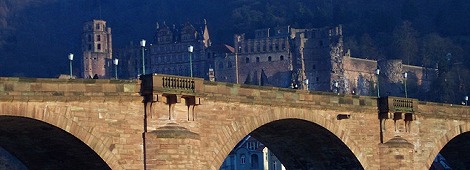

Building a translingual searchable database from a multilingual bibliography

In an interdisciplinary project with the Heidelberg Research Architecture of the Excellence Cluster Asia and Europe in a global context, we are building a searchable online bibliographic database for the Turkology Annual, a systematic bibliography for Turkology and Ottoman Studies. The underlying 26 printed volumes contain references to books, articles, reviews, and conferences in more than 20, mostly non-Western, languages such as Russian, Turkish, Arabic, or Japanese. We apply computational linguistics methods for automatic language identification, named entity recognition and language-aware OCR correction to automatically process these highly multilingual bibliographic entries. Besides refining computational linguistics techniques, our aim in this project is to develop tailored computational linguistics methods and workflows for the eHumanities, thereby promoting the use of IT in the social sciences. Find out more here and on our project homepage.
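Language identification for short bibliographic entries is often done with character n-gram profiles. The sketch below compares trigram count vectors by cosine similarity; it is a generic textbook approach rather than the project's actual component, and the three training samples are invented toy strings (a real system would build profiles from much larger amounts of text per language).

```python
import math
from collections import Counter
from typing import Dict


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams of the lower-cased, space-padded text."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two n-gram count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items())
    norm = (math.sqrt(sum(c * c for c in a.values()))
            * math.sqrt(sum(c * c for c in b.values())))
    return dot / norm if norm else 0.0


def identify(text: str, profiles: Dict[str, Counter]) -> str:
    """Return the language whose profile is most similar to the text."""
    grams = char_ngrams(text)
    return max(profiles, key=lambda lang: cosine(grams, profiles[lang]))


# Toy training samples (invented for illustration, not project data).
samples = {
    "tr": "Osmanlı İmparatorluğu tarihi ve Türk edebiyatı üzerine bir inceleme",
    "en": "A study of the history of the Ottoman Empire and Turkish literature",
    "ru": "История Османской империи и турецкая литература",
}
profiles = {lang: char_ngrams(text) for lang, text in samples.items()}
print(identify("Türk edebiyatı tarihi", profiles))  # tr
```

Character n-grams cope reasonably well with short, name-heavy strings and with OCR noise, since a few misrecognized characters only affect a few n-grams; entries mixing scripts or languages (e.g. a Turkish title with a German publisher) would need per-field or per-segment identification.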

Analyzing event structure for ritual structure research

We are collaborating with scientists from anthropology, in particular Indology, in an interdisciplinary project on ritual science, where we complement the traditional research methods prevalent in the humanities with computational linguistics analysis methods. We are employing data-driven approaches to detect regularities and variations between rituals from various cultures and epochs, based on semi-automatic semantic annotation of ritual descriptions and automatic analysis of the extracted event structures, thereby offering ritual scientists new views on their data. By creating structured and normalized semantic representations for textual descriptions of rituals, we will offer ritual scientists querying functionality that allows them to test and validate their hypotheses empirically against a structurally analyzed corpus. For computational linguistics research, the project allows a detailed investigation of techniques for computing event chains as representations of complex action sequences. Find out more here.
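As an illustration of how regularities and variations between two extracted event chains might be made explicit, the sketch below aligns two chains with a longest common subsequence (LCS): events in the LCS form a shared core, and the remaining events are chain-specific variations. This is one simple technique chosen for illustration, not the project's method, and the normalized event labels are hypothetical.

```python
from typing import List, Sequence, Set, Tuple


def lcs_pairs(a: Sequence[str], b: Sequence[str]) -> List[Tuple[int, int]]:
    """Index pairs (i, j) of one longest common subsequence of a and b."""
    n, m = len(a), len(b)
    # table[i][j] = LCS length of the suffixes a[i:] and b[j:]
    table = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if a[i] == b[j]:
                table[i][j] = table[i + 1][j + 1] + 1
            else:
                table[i][j] = max(table[i + 1][j], table[i][j + 1])
    # Walk the table from the start to recover one optimal alignment.
    pairs, i, j = [], 0, 0
    while i < n and j < m:
        if a[i] == b[j]:
            pairs.append((i, j))
            i, j = i + 1, j + 1
        elif table[i + 1][j] >= table[i][j + 1]:
            i += 1
        else:
            j += 1
    return pairs


def compare_chains(a: Sequence[str], b: Sequence[str]):
    """Split two event chains into a shared core and chain-specific events."""
    pairs = lcs_pairs(a, b)
    in_a: Set[int] = {i for i, _ in pairs}
    in_b: Set[int] = {j for _, j in pairs}
    shared = [a[i] for i, _ in pairs]
    only_a = [e for i, e in enumerate(a) if i not in in_a]
    only_b = [e for j, e in enumerate(b) if j not in in_b]
    return shared, only_a, only_b


# Hypothetical normalized event chains for two ritual descriptions.
ritual_a = ["purify", "invoke_deity", "offer_rice", "recite_mantra", "feast"]
ritual_b = ["purify", "offer_ghee", "invoke_deity", "recite_mantra", "feast"]
shared, only_a, only_b = compare_chains(ritual_a, ritual_b)
print(shared)          # ['purify', 'invoke_deity', 'recite_mantra', 'feast']
print(only_a, only_b)  # ['offer_rice'] ['offer_ghee']
```

An exact-match LCS only works once events are normalized to a common vocabulary, which is one reason structured and normalized semantic representations matter; a similarity-based alignment would be needed to treat related events such as the two offerings as variants of one step.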